What is a decision tree? A decision tree is a supervised machine learning algorithm. Each internal node tests an attribute, each branch from a parent to a child corresponds to one categorical value of the parent's attribute, and each leaf holds an outcome (class). In simple words, you organize your whole dataset into a tree. The tree helps you understand the relationships between attributes and also make predictions. For example, if you build a decision tree from a weather dataset that records whether it will rain (yes or no), it can then predict the rain outcome for any new data you give it. So the advantage of a decision tree is that you train on the data, create a model, and then use that model to make decisions or predictions.
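To make the parent/child idea concrete, here is a minimal Java sketch of how such a tree could be represented and used for prediction. The `TreeNode` class and its methods are hypothetical (not taken from the downloadable source), the tree is built by hand rather than learned from data, and `Map.of` needs Java 9 or later.

```java
import java.util.HashMap;
import java.util.Map;

class TreeDemo {
    public static void main(String[] args) {
        // Hypothetical hand-built tree over the weather attributes (not learned from data).
        TreeNode root = TreeNode.split("outlook")
            .branch("overcast", TreeNode.leaf("yes"))
            .branch("sunny", TreeNode.split("humidity")
                .branch("high", TreeNode.leaf("no"))
                .branch("normal", TreeNode.leaf("yes")))
            .branch("rainy", TreeNode.split("windy")
                .branch("true", TreeNode.leaf("no"))
                .branch("false", TreeNode.leaf("yes")));

        // One new example to classify: attribute name -> categorical value.
        Map<String, String> day = Map.of("outlook", "sunny", "humidity", "normal", "windy", "true");
        System.out.println(root.predict(day)); // prints yes
    }
}

/** Either a leaf holding a class label, or an internal node that tests one
 *  attribute and has one child per categorical value of that attribute. */
class TreeNode {
    final String attribute; // attribute tested at this node (null for a leaf)
    final String label;     // predicted class (null for an internal node)
    final Map<String, TreeNode> children = new HashMap<>();

    private TreeNode(String attribute, String label) {
        this.attribute = attribute;
        this.label = label;
    }

    static TreeNode leaf(String label) { return new TreeNode(null, label); }

    static TreeNode split(String attribute) { return new TreeNode(attribute, null); }

    /** Adds the child reached when this node's attribute equals `value`. */
    TreeNode branch(String value, TreeNode child) {
        children.put(value, child);
        return this;
    }

    /** Walks down the branches matching the example's values until it reaches a leaf. */
    String predict(Map<String, String> example) {
        if (label != null) return label;
        TreeNode child = children.get(example.get(attribute));
        if (child == null) {
            throw new IllegalArgumentException("no branch for " + attribute + " = " + example.get(attribute));
        }
        return child.predict(example);
    }
}
```

Prediction just starts at the root and follows the branch whose value matches the example, one attribute at a time, until it lands on a leaf.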

How to create a decision tree using the ID3 algorithm in Java: before you start, you need to know 4 things.

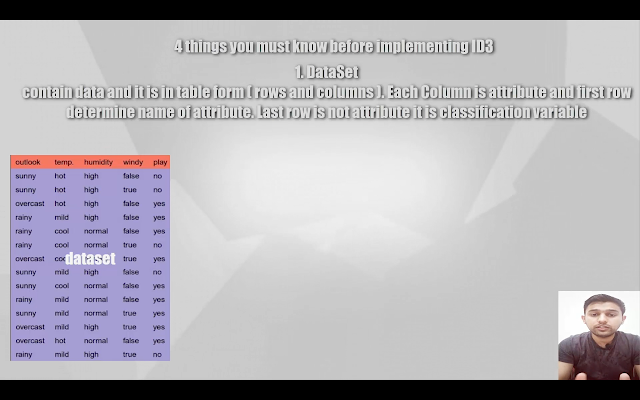

1. What is a dataset?

2. What is entropy?

3. What is information gain?

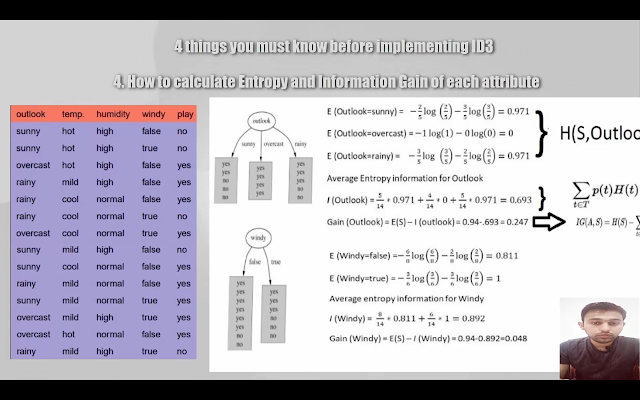

4. How do you calculate entropy and information gain?

Download Java Source code (create decision tree using id3)

A dataset contains data in table form (rows and columns). Each column is an attribute, except the last column, which is the class (classification variable). Entropy measures the randomness (impurity) of the class values in a set of rows: it is zero when all rows have the same class and grows as the class values become more mixed. Information gain is the reduction in entropy obtained by splitting the rows on an attribute.
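As an illustration of that table layout, the sketch below stores a dataset as an array of string rows with the class in the last column, then counts the categorical values in a column. It assumes the classic 14-row weather dataset (outlook, temperature, humidity, windy), which matches the 9 yes / 5 no split used in this post; the class name `WeatherDataset` and its methods are hypothetical.

```java
import java.util.Map;
import java.util.TreeMap;

class WeatherDataset {
    // Attribute columns first; the last column is the class (classification variable).
    static final String[] HEADER = {"outlook", "temperature", "humidity", "windy", "class"};

    // Assumed rows: the classic 14-row weather dataset (9 yes, 5 no).
    static final String[][] ROWS = {
        {"sunny", "hot", "high", "false", "no"},
        {"sunny", "hot", "high", "true", "no"},
        {"overcast", "hot", "high", "false", "yes"},
        {"rainy", "mild", "high", "false", "yes"},
        {"rainy", "cool", "normal", "false", "yes"},
        {"rainy", "cool", "normal", "true", "no"},
        {"overcast", "cool", "normal", "true", "yes"},
        {"sunny", "mild", "high", "false", "no"},
        {"sunny", "cool", "normal", "false", "yes"},
        {"rainy", "mild", "normal", "false", "yes"},
        {"sunny", "mild", "normal", "true", "yes"},
        {"overcast", "mild", "high", "true", "yes"},
        {"overcast", "hot", "normal", "false", "yes"},
        {"rainy", "mild", "high", "true", "no"},
    };

    /** Counts how many rows take each categorical value in the given column. */
    static Map<String, Integer> countValues(String[][] rows, int column) {
        Map<String, Integer> counts = new TreeMap<>(); // sorted keys for stable output
        for (String[] row : rows) counts.merge(row[column], 1, Integer::sum);
        return counts;
    }

    public static void main(String[] args) {
        int classColumn = HEADER.length - 1;
        System.out.println(countValues(ROWS, classColumn)); // prints {no=5, yes=9}
        System.out.println(countValues(ROWS, 0));           // prints {overcast=4, rainy=5, sunny=5}
    }
}
```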

To calculate entropy and information gain, first calculate the entropy of the last column (the classification variable). Start by counting its distinct categorical values; in my case there are two, yes and no. The dataset and the formula are shown in the picture above. There are 14 rows in total, and the last column contains 9 yes and 5 no. Apply the formula to each categorical value (yes and no) and sum the results; that sum, about 0.940, is the entropy of the weather dataset.
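Written out, the entropy formula is the usual one below, where $p_i$ is the fraction of rows with the $i$-th class value. Base-2 logarithms are assumed here, which is consistent with the 0.247 gain quoted later in this post. With 9 yes and 5 no out of 14 rows:

$$
E(S) = -\sum_{i} p_i \log_2 p_i
$$

$$
E(\text{weather}) = -\frac{9}{14}\log_2\frac{9}{14} - \frac{5}{14}\log_2\frac{5}{14} \approx 0.4098 + 0.5305 = 0.9403
$$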

Next, calculate the entropy of each attribute. The picture above does this for the outlook attribute: it calculates the entropy of the rows for each outlook value, then the average entropy information of outlook, which is the average of those entropies weighted by the fraction of rows that have each value. The information gain of outlook is the entropy of the weather dataset minus the average entropy information of outlook. So first find the entropy of the dataset and the average entropy information of the attribute; their difference is the attribute's information gain.
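The same calculation written out for outlook, where $S_v$ is the set of rows in which attribute $A$ has value $v$. This assumes the per-value class counts of the classic weather dataset (sunny: 2 yes and 3 no; overcast: 4 yes; rainy: 3 yes and 2 no), which reproduce the 0.247 gain quoted in this post:

$$
I(A) = \sum_{v \in \text{values}(A)} \frac{|S_v|}{|S|}\, E(S_v), \qquad \text{Gain}(A) = E(S) - I(A)
$$

$$
E(\text{sunny}) = -\tfrac{2}{5}\log_2\tfrac{2}{5} - \tfrac{3}{5}\log_2\tfrac{3}{5} \approx 0.97095, \quad E(\text{overcast}) = -\tfrac{4}{4}\log_2\tfrac{4}{4} = 0, \quad E(\text{rainy}) \approx 0.97095
$$

$$
I(\text{outlook}) = \tfrac{5}{14}(0.97095) + \tfrac{4}{14}(0) + \tfrac{5}{14}(0.97095) \approx 0.69354
$$

$$
\text{Gain}(\text{outlook}) = E(\text{weather}) - I(\text{outlook}) \approx 0.94029 - 0.69354 = 0.24675 \approx 0.247
$$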

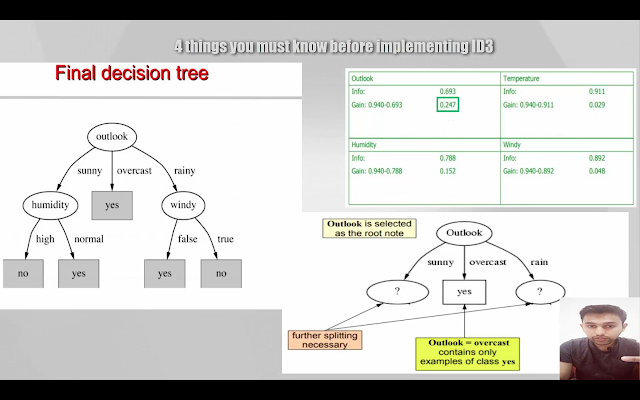

After getting the information gain of every attribute, how do you create the decision tree? First you need to know:

1. How many nodes will the decision tree have?

2. What will be the root of the decision tree?

3. How do you add attributes to the decision tree?

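In the weather example, the decision tree uses the total number of attributes minus one as decision nodes: the attribute with the lowest information gain is skipped. The attribute with the highest information gain becomes the root of the decision tree, and the remaining attributes are added below it in descending order of information gain. Strictly speaking, ID3 does not fix this order once for the whole dataset: under each branch it recomputes information gain using only the rows that reach that branch, picks the best remaining attribute, and stops when a branch's rows all have the same class (entropy zero) or no attributes are left. For the weather dataset this recursive procedure also ends up with outlook at the root, humidity and windy below it, and temperature unused.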
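In the textbook form of ID3, the gain calculation is repeated on each branch's subset of rows rather than done once for the whole dataset. Below is a minimal sketch of that recursive version, assuming the classic 14-row weather dataset with the class in the last column. The names `Id3`, `Node`, and `build` are hypothetical and not taken from the downloadable source.

```java
import java.util.*;

class Id3Demo {
    static final String[] HEADER = {"outlook", "temperature", "humidity", "windy", "class"};
    // Assumed rows: the classic 14-row weather dataset; the last column is the class.
    static final String[][] ROWS = {
        {"sunny", "hot", "high", "false", "no"},
        {"sunny", "hot", "high", "true", "no"},
        {"overcast", "hot", "high", "false", "yes"},
        {"rainy", "mild", "high", "false", "yes"},
        {"rainy", "cool", "normal", "false", "yes"},
        {"rainy", "cool", "normal", "true", "no"},
        {"overcast", "cool", "normal", "true", "yes"},
        {"sunny", "mild", "high", "false", "no"},
        {"sunny", "cool", "normal", "false", "yes"},
        {"rainy", "mild", "normal", "false", "yes"},
        {"sunny", "mild", "normal", "true", "yes"},
        {"overcast", "mild", "high", "true", "yes"},
        {"overcast", "hot", "normal", "false", "yes"},
        {"rainy", "mild", "high", "true", "no"},
    };

    public static void main(String[] args) {
        Node root = Id3.build(Arrays.asList(ROWS), List.of(0, 1, 2, 3)); // attribute columns 0..3
        root.print(HEADER, "");
    }
}

/** A leaf (label != null) or an internal node that splits on column `attribute`. */
class Node {
    int attribute = -1;
    String label;
    final Map<String, Node> children = new TreeMap<>(); // sorted, so printing is stable

    /** Follows the branches matching the row's values; null if a value was never seen in training. */
    String predict(String[] row) {
        if (label != null) return label;
        Node child = children.get(row[attribute]);
        return child == null ? null : child.predict(row);
    }

    void print(String[] header, String indent) {
        if (label != null) { System.out.println(indent + "-> " + label); return; }
        for (Map.Entry<String, Node> e : children.entrySet()) {
            System.out.println(indent + header[attribute] + " = " + e.getKey());
            e.getValue().print(header, indent + "  ");
        }
    }
}

class Id3 {
    /** Entropy of the class (last) column, with log base 2. */
    static double entropy(List<String[]> rows) {
        double h = 0.0;
        for (int count : classCounts(rows).values()) {
            double p = (double) count / rows.size();
            h -= p * Math.log(p) / Math.log(2);
        }
        return h;
    }
    static Map<String, Integer> classCounts(List<String[]> rows) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String[] row : rows) counts.merge(row[row.length - 1], 1, Integer::sum);
        return counts;
    }
    /** Groups rows by their value in the given column. */
    static Map<String, List<String[]>> partition(List<String[]> rows, int column) {
        Map<String, List<String[]>> parts = new TreeMap<>();
        for (String[] row : rows) parts.computeIfAbsent(row[column], k -> new ArrayList<>()).add(row);
        return parts;
    }
    /** Entropy of the rows minus the size-weighted average entropy of the subsets. */
    static double gain(List<String[]> rows, int column) {
        double average = 0.0;
        for (List<String[]> part : partition(rows, column).values()) average += (double) part.size() / rows.size() * entropy(part);
        return entropy(rows) - average;
    }
    /** Builds the subtree for `rows`, choosing among the attribute columns still unused on this path. */
    static Node build(List<String[]> rows, List<Integer> attributes) {
        Node node = new Node();
        if (entropy(rows) == 0.0 || attributes.isEmpty()) {
            // Pure subset or nothing left to split on: make a leaf with the most common class.
            node.label = Collections.max(classCounts(rows).entrySet(), Map.Entry.comparingByValue()).getKey();
            return node;
        }
        int best = attributes.get(0);
        for (int a : attributes) if (gain(rows, a) > gain(rows, best)) best = a; // gain on this subset only
        node.attribute = best;
        List<Integer> remaining = new ArrayList<>(attributes);
        remaining.remove(Integer.valueOf(best));
        for (Map.Entry<String, List<String[]>> e : partition(rows, best).entrySet()) node.children.put(e.getKey(), build(e.getValue(), remaining));
        return node;
    }
}
```

On this dataset the sketch puts outlook at the root, makes overcast a yes leaf, splits sunny on humidity and rainy on windy, and never uses temperature. Recomputing the gain per subset is what lets different branches choose different attributes.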

As you can see in the picture above, outlook has the highest information gain, 0.247, so it is set as the root of the tree. Outlook has 3 categorical values (sunny, overcast, rainy). The entropy of overcast is zero because it contains only yes, so that branch is set directly to yes. The second and third highest information gains are humidity (0.152) and windy (0.048). I skip the temperature attribute because it is the last attribute, with the lowest information gain.
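The gains quoted above can be reproduced with a short Java sketch. It assumes the classic 14-row weather dataset (the post's own dataset is only shown as a picture) and base-2 logarithms; the class name `InformationGain` is hypothetical, not from the downloadable source.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;

class InformationGain {
    static final String[] HEADER = {"outlook", "temperature", "humidity", "windy", "class"};
    // Assumed rows: the classic 14-row weather dataset; the last column is the class.
    static final String[][] ROWS = {
        {"sunny", "hot", "high", "false", "no"},
        {"sunny", "hot", "high", "true", "no"},
        {"overcast", "hot", "high", "false", "yes"},
        {"rainy", "mild", "high", "false", "yes"},
        {"rainy", "cool", "normal", "false", "yes"},
        {"rainy", "cool", "normal", "true", "no"},
        {"overcast", "cool", "normal", "true", "yes"},
        {"sunny", "mild", "high", "false", "no"},
        {"sunny", "cool", "normal", "false", "yes"},
        {"rainy", "mild", "normal", "false", "yes"},
        {"sunny", "mild", "normal", "true", "yes"},
        {"overcast", "mild", "high", "true", "yes"},
        {"overcast", "hot", "normal", "false", "yes"},
        {"rainy", "mild", "high", "true", "no"},
    };

    /** Entropy of the class (last) column: minus the sum of p * log2(p) over the class values. */
    static double entropy(List<String[]> rows) {
        Map<String, Integer> counts = new HashMap<>();
        for (String[] row : rows) counts.merge(row[row.length - 1], 1, Integer::sum);
        double h = 0.0;
        for (int count : counts.values()) {
            double p = (double) count / rows.size();
            h -= p * Math.log(p) / Math.log(2);
        }
        return h;
    }

    /** Entropy of the rows minus the average entropy of the subsets, weighted by subset size. */
    static double gain(List<String[]> rows, int column) {
        Map<String, List<String[]>> subsets = new HashMap<>();
        for (String[] row : rows) {
            subsets.computeIfAbsent(row[column], k -> new ArrayList<>()).add(row);
        }
        double average = 0.0;
        for (List<String[]> subset : subsets.values()) {
            average += (double) subset.size() / rows.size() * entropy(subset);
        }
        return entropy(rows) - average;
    }

    public static void main(String[] args) {
        List<String[]> rows = Arrays.asList(ROWS);
        System.out.printf(Locale.ROOT, "entropy(dataset) = %.3f%n", entropy(rows));
        for (int column = 0; column < HEADER.length - 1; column++) {
            System.out.printf(Locale.ROOT, "gain(%s) = %.3f%n", HEADER[column], gain(rows, column));
        }
    }
}
```

Run as is, it prints a dataset entropy of 0.940 and gains of 0.247 (outlook), 0.029 (temperature), 0.152 (humidity) and 0.048 (windy), so temperature is indeed the attribute with the lowest gain.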

You can download the Java source code with 3 example datasets:

1. Restaurant

2. Weather

3. Iris

Download Java Source code (create decision tree using id3)
